Deep RL class - huggingface

par Thomas Simonini

- Unit 1 - Introduction to Deep Reinforcement Learning

- Unit 2 - Introduction to Q-Learning

- 📖 part 1 - we learned about the value-based methods and the difference between Monte Carlo and Temporal Difference Learning. Then a quizz (easy one)

- 📖 part 2 - and then Q-learning which is an off-policy value-based method that uses a TD approach to train its action-value function. Then a quizz (less easier)

- 👩💻 hands-on. 1st algo (FrozenLake) is published in Guillaume63/q-FrozenLake-v1-4x4-noSlippery. 2nd algo (Taxi) is published in Guillaume63/q-Taxi-v3. Leaderboard is here

- Unit 3 - Deep Q-Learning with Atari Games

- Unit 4 - An Introduction to Unity ML-Agents with Hugging Face 🤗

- Unit 5 - Policy Gradient with PyTorch

Didn’t mention that but I have started The Hugging Face Deep Reinforcement Learning Class by Thomas Simonini.

Thomas is now part of HuggingFace.

1st step is to fork the repo, and for mine it is here.

And clone it locally: git clone git@github.com:castorfou/deep-rl-class.git ou git clone https://github.com/castorfou/deep-rl-class.git

I followed the 1st unit in May/11.

there is a community on discord at https://discord.gg/aYka4Yhff9, with a lounge about RL.

Unit 1 - Introduction to Deep Reinforcement Learning

📖 It starts with some general introduction to deep RL and then a quizz.

👩💻 1st practice uses this lunar lander environment, and you train a PPO agent to get the highest score,

- and this runs on colab : https://github.com/huggingface/deep-rl-class/blob/main/unit1/unit1.ipynb (just by clicking on

)

- there is a leaderboard running under huggingface (one can publish models to huggingface) https://huggingface.co/spaces/chrisjay/Deep-Reinforcement-Learning-Leaderboard . Just need an huggingface account for that (used my Michelin account)

A guide has been recently added explaining how to tune hyperparameters using optuna. 👉 https://github.com/huggingface/deep-rl-class/blob/main/unit1/unit1_optuna_guide.ipynb. Should do it!

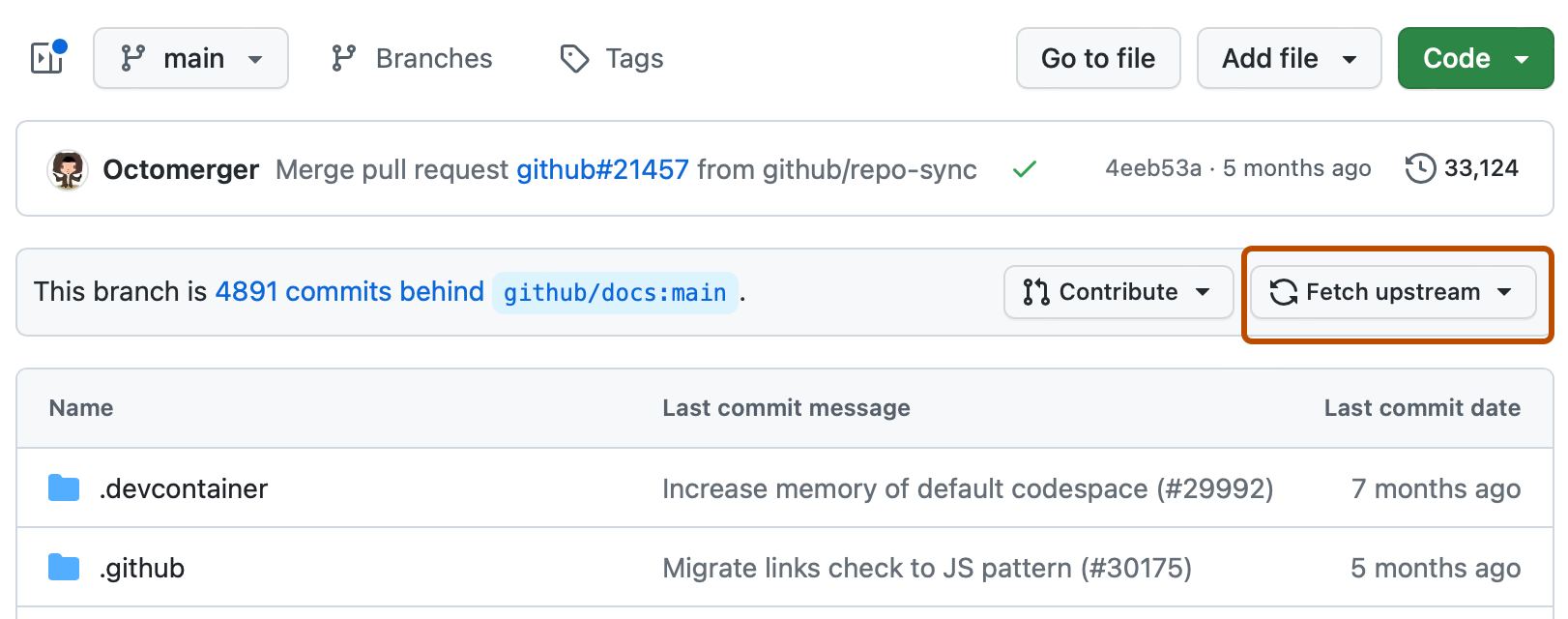

To start unit2. Introduction to Q-Learning

- first update from fork just by clicking

- and update your local repo (

git fetchgit pull)

Unit 2 - Introduction to Q-Learning

📖 part 1 - we learned about the value-based methods and the difference between Monte Carlo and Temporal Difference Learning. Then a quizz (easy one)

📖 part 2 - and then Q-learning which is an off-policy value-based method that uses a TD approach to train its action-value function. Then a quizz (less easier)

👩💻 hands-on. 1st algo (FrozenLake) is published in Guillaume63/q-FrozenLake-v1-4x4-noSlippery. 2nd algo (Taxi) is published in Guillaume63/q-Taxi-v3. Leaderboard is here

Unit 3 - Deep Q-Learning with Atari Games

📖 The Deep Q-Learning chapter 👾 👉 https://huggingface.co/blog/deep-rl-dqn

👩💻 Start the hands-on here 👉 https://colab.research.google.com/github/huggingface/deep-rl-class/blob/main/unit3/unit3.ipynb

from discord, a video (30’) by Antonin Raffin about Automatic Hyperparameter Optimization @ ICRA 22 - Tools for Robotic RL 6/8. Never thought about it that way, it can help to speed training phase.

from discord as well a video to build a doom ai model (3 hours!)

and from discord a lecture from Pieter Abbeel explaining Q-value to DQN and why we have this double network at L2 Deep Q-Learning (Foundations of Deep RL Series. This is part of a larger lecture available at Foundations of Deep RL – 6-lecture series by Pieter Abbeel

And then a video explaining Deep RL at the Edge of the Statistical Precipice. This was from a paper at Neurips.

Unit 4 - An Introduction to Unity ML-Agents with Hugging Face 🤗

📖 tutorial 👉 https://link.medium.com/KOpvPdyz4qb

Thomas starts with evolutions on RL domain, citing Decision Transformers as one of the last hot topic. And then introduces Unity and how it can now be used with RL agents.

Interesting idea to introduce curiosity and to make it real as an intrinsic reward.

Note: It guided me to gentle introductions to cross-entropy for machine learning and information entropy.

Low Probability Event (surprising): More information. High entropy.

Higher Probability Event (unsurprising): Less information. Low entropy.

Skewed Probability Distribution (unsurprising): Low entropy.

Balanced Probability Distribution (surprising): High entropy.

Cross-Entropy and KL divergence are similar but not exactly the same. Specifically, the KL divergence measures a very similar quantity to cross-entropy. It measures the average number of extra bits required to represent a message with Q instead of P, not the total number of bits.

👩💻 Here are the steps for the training:

- clone repo and install environment

# from ~/git/guillaume

git clone https://github.com/huggingface/ml-agents/

# bug with python 3.9 - https://github.com/Unity-Technologies/ml-agents/issues/5689

conda create --name ml-agents python=3.8

conda activate ml-agents

# Go inside the repository and install the package

cd ml-agents

pip install -e ./ml-agents-envs

pip install -e ./ml-agents

- download the Environment Executable (pyramids from google drive)

Unzip it and place it inside the MLAgents cloned repo in a new folder called trained-envs-executables/linux

- modify nbr of steps to 1000000 in

config/ppo/PyramidsRND.yaml - train

mlagents-learn config/ppo/PyramidsRND.yaml --env=training-envs-executables/linux/Pyramids/Pyramids --run-id="First Training" --no-graphics

- monitor training

tensorboard --logdir results --port 6006

(auto reload is off by default this day, click settings and check Reload data) (because I have installed v2.3.0 and not 2.4.0, there is no autofit domain to data and it is annoying)

- push to 🤗 Hub

Create a new token (https://huggingface.co/settings/tokens) with write role

Copy the token, Run this and past the token huggingface-cli login

Push to Hub

mlagents-push-to-hf --run-id='First Training' --local-dir='results/First Training' --repo-id='Guillaume63/MLAgents-Pyramids' --commit-message='Trained pyramids agent upload'

and now I can play it from https://huggingface.co/Guillaume63/MLAgents-Pyramids and watch your Agent play…

Unit 5 - Policy Gradient with PyTorch

1️⃣ 📖 Read Policy Gradient with PyTorch Chapter.

Advantage and disadvantage of policy gradient vs DQN.

Reinforce algorithm (Monte Carlo policy gradient): it uses an estimated return from an entire episode to update the policy parameters.

The output of it is a probability distribution of actions. And we try to maximize J(θ) which is this estimated return. (details of Policy Gradient theorem in this video from Pieter Abbeel)

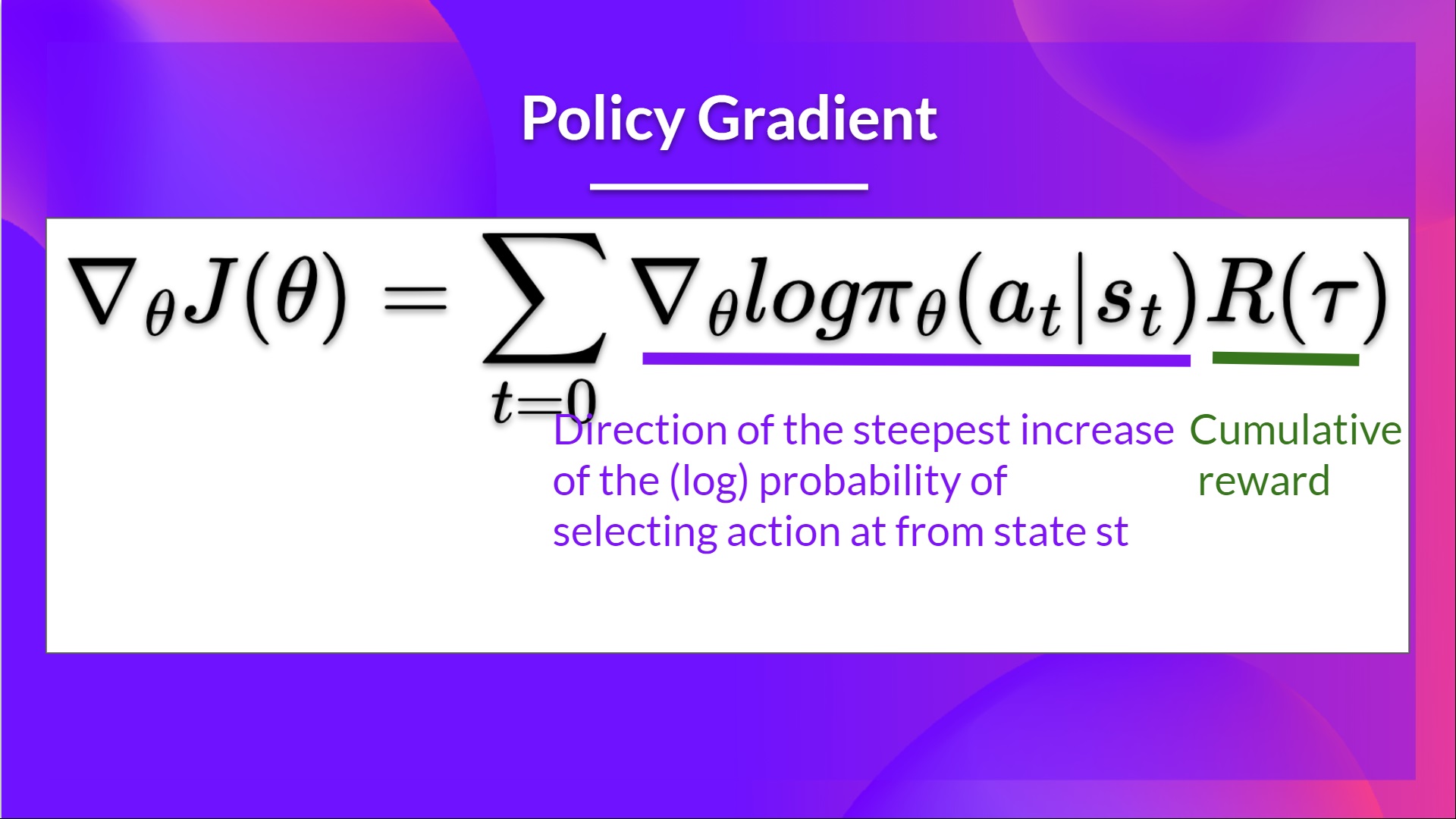

We will update weights using this gradient:

-

$\nabla_\theta\log\pi_\theta(a_t | s_t)$ is the direction of steepest increase of the (log) probability of selecting action at from state $s_t$. => This tells use how we should change the weights of policy if we want to increase/decrease the log probability of selecting action at state $s_t$.

-

$R(\tau)$ is the scoring function:

- If the return is high, it will push up the probabilities of the (state, action) combinations.

- Else, if the return is low, it will push down the probabilities of the (state, action) combinations.

2️⃣ 👩💻 Then dive on the hands-on where you’ll code your first Deep Reinforcement Learning algorithm from scratch: Reinforce.

👉 https://colab.research.google.com/github/huggingface/deep-rl-class/blob/main/unit5/unit5.ipynb

1st model is Cartpole. After training on 10’000 episodes, perfect score of 500 +- 0. Thomas pointed me to a video (3h) from Aniti where Antonin Raffin gives some tips and tricks. And points to many papers such as Deep Reinforcement Learning that Matters (in zotero)

2nd model is Pixelcopter. High level of variance in perf. Recommended by Thomas to tune hyper parameters (optuna?).

3rd model is Pong.